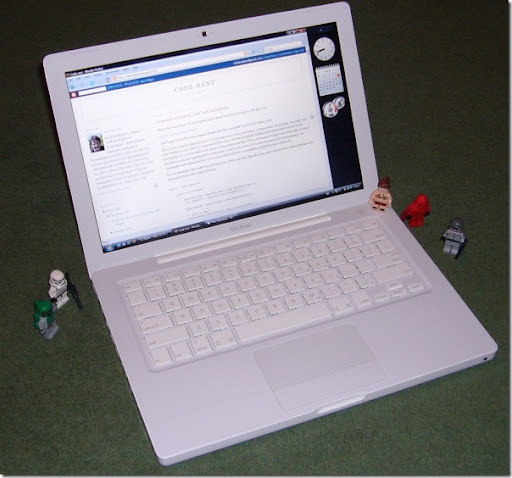

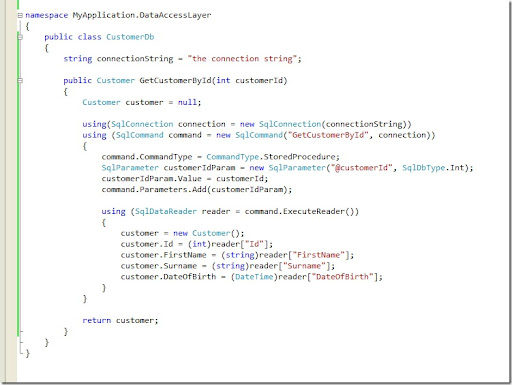

I recently wanted to write a test for this piece of code using Rhino Mocks:

```csharp
public void Register(string username, string password, string posterName)
{
    User user = new User()
    {
        Name = username,
        Password = password,
        Role = Role.Poster
    };
    userRepository.Save(user);

    Poster poster = new Poster()
    {
        Name = posterName,
        ContactName = username,
        PosterUsers = new System.Collections.Generic.List<PosterUser>() { new PosterUser() { User = user } }
    };
    posterRepository.Save(poster);
}
```

As you can see, it takes three parameters (username, password and posterName), constructs a User object and passes it to the Save method of userRepository, then constructs a Poster object and passes it to the Save method of posterRepository. Both userRepository and posterRepository are supplied through Inversion of Control. Here's my initial attempt at a unit test for the Register method:

```csharp
[Test]
public void RegisterShouldSaveNewUserAndPoster()
{
    string username = "freddy";
    string password = "fr0dd1";
    string posterName = "the works";

    using (mocks.Record())
    {
        // how do I get the User object that should be passed to userRepository???
        //userRepository.Save(????);
        //posterRepository.Save(????);
    }

    using (mocks.Playback())
    {
        posterLoginController.Register(username, password, posterName);
        // here I need to assert that the correct User and Poster objects were passed
        // to the repository mocks
    }
}
```

I couldn't see how to get the object that was passed to my mock instances of userRepository and posterRepository. I needed some way of capturing the arguments to the mock objects so that I could assert they were correct in the playback part of the test.
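For comparison, and without Rhino Mocks at all, one way to capture arguments is a hand-written fake repository that simply remembers what it was given. This is a minimal sketch under several assumptions: the repositories are taken to be `IUserRepository`/`IPosterRepository` interfaces injected through the controller's constructor, the `User`, `Poster`, `PosterUser` and `Role` types are reconstructed from the Register method above, and the `Capturing*` class names are hypothetical.

```csharp
using System.Collections.Generic;

// Assumed shapes of the domain types, reconstructed from Register.
public enum Role { Poster }

public class User
{
    public string Name { get; set; }
    public string Password { get; set; }
    public Role Role { get; set; }
}

public class PosterUser
{
    public User User { get; set; }
}

public class Poster
{
    public string Name { get; set; }
    public string ContactName { get; set; }
    public List<PosterUser> PosterUsers { get; set; }
}

// Assumed repository interfaces; the post does not show them.
public interface IUserRepository { void Save(User user); }
public interface IPosterRepository { void Save(Poster poster); }

// Hypothetical hand-written fakes: each one records the last object passed to Save.
public class CapturingUserRepository : IUserRepository
{
    public User Saved { get; private set; }
    public void Save(User user) { Saved = user; }
}

public class CapturingPosterRepository : IPosterRepository
{
    public Poster Saved { get; private set; }
    public void Save(Poster poster) { Saved = poster; }
}

public class PosterLoginController
{
    private readonly IUserRepository userRepository;
    private readonly IPosterRepository posterRepository;

    // Constructor injection is an assumption about how the IoC container wires this up.
    public PosterLoginController(IUserRepository userRepository, IPosterRepository posterRepository)
    {
        this.userRepository = userRepository;
        this.posterRepository = posterRepository;
    }

    public void Register(string username, string password, string posterName)
    {
        User user = new User { Name = username, Password = password, Role = Role.Poster };
        userRepository.Save(user);

        Poster poster = new Poster
        {
            Name = posterName,
            ContactName = username,
            PosterUsers = new List<PosterUser> { new PosterUser { User = user } }
        };
        posterRepository.Save(poster);
    }
}
```

In a test you would construct the controller with the two fakes, call Register, and then assert against `Saved` on each fake. The trade-off is one extra class per repository interface, which is exactly the boilerplate a mocking framework is meant to remove.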

Alternatively, I briefly considered using abstract factories to create my User and Poster objects:

```csharp
public void Register(string username, string password, string posterName)
{
    User user = userFactory.Create(username, password, Role.Poster);
    userRepository.Save(user);

    Poster poster = posterFactory.Create(posterName, username, user);
    posterRepository.Save(poster);
}
```
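The factories themselves aren't shown, so here is a minimal sketch of what they might look like. The interface names `IUserFactory` and `IPosterFactory` are hypothetical, their `Create` signatures are inferred from the two calls in the refactored Register, and the domain types are assumed shapes reconstructed from the original Register method.

```csharp
using System.Collections.Generic;

// Assumed shapes of the domain types.
public enum Role { Poster }

public class User
{
    public string Name { get; set; }
    public string Password { get; set; }
    public Role Role { get; set; }
}

public class PosterUser { public User User { get; set; } }

public class Poster
{
    public string Name { get; set; }
    public string ContactName { get; set; }
    public List<PosterUser> PosterUsers { get; set; }
}

// Hypothetical factory interfaces: these are what the test would mock.
public interface IUserFactory
{
    User Create(string username, string password, Role role);
}

public interface IPosterFactory
{
    Poster Create(string posterName, string contactName, User user);
}

// Production implementations: the object construction moves out of Register into here.
public class UserFactory : IUserFactory
{
    public User Create(string username, string password, Role role)
    {
        return new User { Name = username, Password = password, Role = role };
    }
}

public class PosterFactory : IPosterFactory
{
    public Poster Create(string posterName, string contactName, User user)
    {
        return new Poster
        {
            Name = posterName,
            ContactName = contactName,
            PosterUsers = new List<PosterUser> { new PosterUser { User = user } }
        };
    }
}
```

The concrete classes would be registered with the IoC container for production, while the tests mock the interfaces, which is what makes the expectations in the next test so straightforward.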

Then I could have written the tests like this:

```csharp
[Test]
public void RegisterShouldSaveNewUserAndPoster()
{
    string username = "freddy";
    string password = "fr0dd1";
    string posterName = "the works";

    using (mocks.Record())
    {
        // the factories return known instances, so the repository expectations
        // can refer to exactly those objects
        User user = new User();
        Expect.Call(userFactory.Create(username, password, Role.Poster)).Return(user);
        userRepository.Save(user);

        Poster poster = new Poster();
        Expect.Call(posterFactory.Create(posterName, username, user)).Return(poster);
        posterRepository.Save(poster);
    }

    using (mocks.Playback())
    {
        posterLoginController.Register(username, password, posterName);
    }
}
```

It makes for a very clean test, and it would force me to separate the concern of creating new User and Poster instances from the coordination of creating and saving them, which is what the controller method 'Register' is really about. But in this case it felt like overkill to create abstract factories just to make my tests neat.

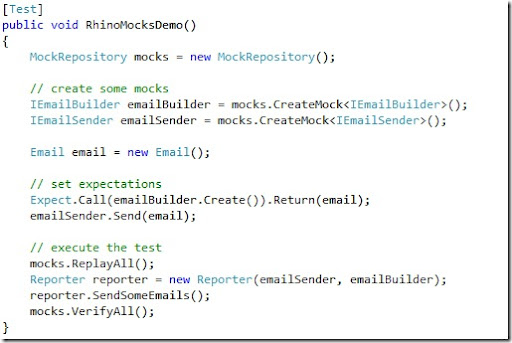

A bit more digging in the Rhino Mocks documentation revealed callbacks. A callback is a delegate that performs custom validation of a mocked method call, effectively replacing Rhino Mocks' usual argument matching with your own logic. The delegate must take the same parameters as the method it validates and return a bool: true if the call is valid, false if it isn't.
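To make that contract concrete, here is a toy model of the idea. It is an illustration only, not Rhino Mocks' actual implementation: a hypothetical `CallbackExpectation<T>` hands each call's argument to a `Func<T, bool>` and treats a false result as a failed expectation.

```csharp
using System;

// Toy model of a callback-validated expectation (illustrative only, not Rhino Mocks' code).
public class CallbackExpectation<T>
{
    private readonly Func<T, bool> callback;

    public CallbackExpectation(Func<T, bool> callback)
    {
        this.callback = callback;
    }

    // Called in place of the mocked method: the delegate receives the same argument
    // the real method would, and a false result is reported as a failed expectation.
    public void Verify(T argument)
    {
        if (!callback(argument))
        {
            throw new InvalidOperationException("Callback rejected argument: " + argument);
        }
    }
}
```

For example, `new CallbackExpectation<string>(name => name == "freddy")` accepts `Verify("freddy")` and throws for any other name.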

It's quite easy to use callbacks with the generic Func delegate (introduced in .NET 3.5 alongside LINQ) and lambda expressions, although it took me a few attempts to work it all out. Here's the test:

```csharp
[Test]
public void RegisterShouldSaveNewUserAndPoster()
{
    string username = "freddy";
    string password = "fr0dd1";
    string posterName = "the works";
    User user = null;
    Poster poster = null;

    using (mocks.Record())
    {
        // the null argument is only a placeholder; the callback decides whether
        // the call matches, and captures the real argument as a side effect
        userRepository.Save(null);
        LastCall.Callback(new Func<User, bool>(u => { user = u; return true; }));

        posterRepository.Save(null);
        LastCall.Callback(new Func<Poster, bool>(p => { poster = p; return true; }));
    }

    using (mocks.Playback())
    {
        posterLoginController.Register(username, password, posterName);

        Assert.AreEqual(username, user.Name);
        Assert.AreEqual(password, user.Password);
        Assert.AreEqual(posterName, poster.Name);
        Assert.AreEqual(user, poster.PosterUsers[0].User);
    }
}
```
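The capture trick works because each lambda is a closure: assigning to `user` or `poster` inside the lambda writes to the test method's own local variable, not to a copy. Here is a stripped-down sketch with no Rhino Mocks involved, where a hypothetical `FakeRepository<T>` stands in for the mock by handing each saved object to the callback it was given.

```csharp
using System;

public class User { public string Name { get; set; } }

// Hypothetical stand-in for a mocked repository: it passes each saved object to the
// callback, much as the mock passes the call's argument to the delegate.
public class FakeRepository<T>
{
    private readonly Func<T, bool> callback;
    public FakeRepository(Func<T, bool> callback) { this.callback = callback; }
    public bool Save(T item) { return callback(item); }
}

public static class ClosureDemo
{
    public static User SaveAndCapture(User toSave)
    {
        User captured = null; // declared outside the lambda, like 'user' in the test
        var repository = new FakeRepository<User>(u => { captured = u; return true; });
        repository.Save(toSave);
        return captured; // the lambda has written the saved argument into this variable
    }
}
```

`ClosureDemo.SaveAndCapture(freddy)` returns the very same `freddy` instance, which is why the test above can assert against `user` and `poster` after playback.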

The syntax is quite awkward, and it's not at all obvious from the Rhino Mocks API how you'd do this. I don't like the LastCall syntax either, but from this blog post it looks like that's something Oren is working on. There's also Moq, a new mocking framework that fully leverages the new C# 3.0 language features such as lambda expressions. Definitely something to keep an eye on.